In order to better understand what language is, Neurosurgery, Neuroscience, Linguistics and Psychology must continue to unite

A blogpost by Dr. Djaina Satoer, PhD, Clinical Linguist and Assistant Professor at the department of Neurosurgery at the Erasmus MC University Medical Center in Rotterdam

“Language is a human system of communication that uses arbitrary signals, such as voice sounds, gestures and written symbols.”

What is language?

LANGUAGE CONSISTS OF SOUNDS, MEANING AND GRAMMAR

Most people take the ability to speak for granted, yet the underlying mechanism of language is rather complex. For about three centuries, linguists, philosophers, psychologists, neuroscientists and medical doctors have dedicated extensive research to the questions of what language is and where it is located in the brain. Although several debates about the underlying mechanism of language are still ongoing, it is now widely agreed that language is an arbitrary system. The central idea is that language can be analyzed as a formal system composed of differential elements, such as sound and meaning. This concept was first introduced by Ferdinand de Saussure (1857-1913), a Swiss linguist and philosopher and the founder of the school of Structuralism. He called the language sound the signifier (signifiant) and its meaning the signified (signifié) (see the example in the Figure below and Cours de Linguistique Générale, 1916 [1]). Furthermore, he made a distinction between language (langue) and speech (parole). Language contains concepts (including sounds and meaning), whereas speech is the actual spoken output we hear in daily life. By changing only a single sound, the meaning of a word can change instantly, e.g. book – look. This theory was able to explain the sound system of many languages across the world. However, it could not entirely capture the complex system of meaning, grammar and pragmatics.

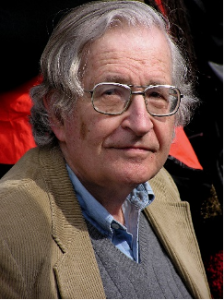

Therefore, a new era arose, that of so-called Generative Linguistics, founded by the linguist Noam Chomsky. He expanded the idea of language as an arbitrary system to other layers of language, in particular morphology and syntax (grammar). In his seminal book Syntactic Structures (1957) [2] he argued that language (words and sentences) consists of both an underlying and a surface structure. The underlying structure contains information about the grammatical aspects of the sentence (e.g. subject, verb, object: I (subject) eat (verb) an ice-cream (object)), whereas the surface structure is the actual spoken output (e.g. the sentences “I eat an ice-cream” and “An ice-cream (object) was eaten (verb) by me (subject)” mean the same). Chomsky postulated that humans are born with a so-called innate “Universal Grammar” (UG) and that, via spoken language input, certain rules of the language in question are activated or deactivated, such as the right word order (e.g. subject, verb, object in English). This theory was able to explain many cross-linguistic phenomena. However, there was resistance from the fields of language acquisition and language disorders, as deviations in language could not be explained well by the theory of UG.

Where is language located in the brain – fixed patterns?

LANGUAGE COMPOSED OF SOUND, MEANING AND GRAMMAR LOCATED IN THE BRAIN

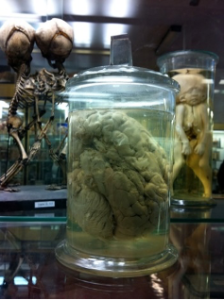

The best way to investigate the neuro-anatomy of language is via impaired language, i.e. aphasia. Aphasia is an acquired language disorder caused by brain injury, such as a stroke, a trauma, a degenerative disease or a brain tumor. Due to a lesion in the language areas of the brain, language can be distorted in various ways. Via case studies and post-mortem research, language functions were localized in the brain by the pioneers Paul Broca (1824-1880) and Carl Wernicke (1848-1905). The most famous example is a patient, monsieur Leborgne, examined by Paul Broca at the Bicêtre Hospital, who could not say anything apart from the word “Tan”. Post-mortem research revealed a lesion in the inferior frontal gyrus, classically known as Broca’s area. An aphasia with deficits in language production, in particular grammatical sentence production, in combination with intact comprehension (as in “monsieur Tan”) was defined as Broca’s aphasia. Carl Wernicke encountered the opposite in two patients: their language comprehension was impaired, combined with fluent but meaningless speech production. This was then called Wernicke’s aphasia. He identified lesions in the superior temporal gyrus, classically known as Wernicke’s area. Later on, Ludwig Lichtheim (1845-1928) introduced the idea that a fibre tract, the arcuate fasciculus, was responsible for information exchange between Broca’s and Wernicke’s areas. Damage to this tract would disrupt the language sound system, a condition known as Conduction aphasia. From a neuro-anatomical point of view, it thus seemed possible to pinpoint in the brain the different elements of language (sound, meaning and grammar) as formerly introduced by De Saussure and Chomsky, among others.

Medical doctors, neuroscientists and linguists united?

LANGUAGE – INDIVIDUAL CARTOGRAPHY

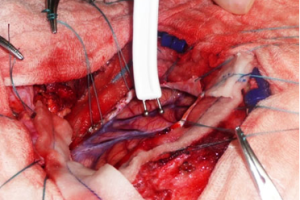

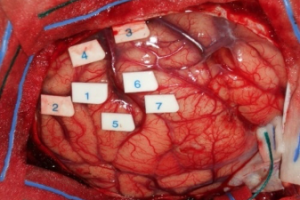

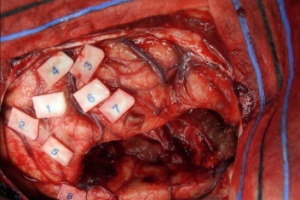

Neuro-imaging and clinical studies in the past century have indicated that the Broca-Wernicke-Lichtheim language model is too simplistic. The field of neurosurgery, in particular awake brain tumor surgery, has contributed significantly to the modern view of the language system in the brain. With awake brain tumor surgery, we aim to remove as much of the brain tumor as possible without damaging critical language areas, so that the patient is still able to communicate after surgery. The neurosurgeon and clinical linguist must work closely together, with the patient and with the entire awake team, to achieve a successful treatment. With this technique, it is possible to observe and study in real time the language deficits induced by direct electrical cortico-subcortical stimulation (DES; see Figures A-C). Via DES, language can be temporarily “switched off”. This is evidenced by different language or speech errors (see B, positive sites), such as: no speech at all (speech arrest), semantic / meaning errors (e.g. car for bus), phonemic / sound errors (e.g. bis for bus), non-existing words or neologisms (e.g. hosk for bus) or articulation problems (dysarthria). Widespread cortical and subcortical areas, well beyond the classical Broca’s and Wernicke’s areas and the arcuate fasciculus, were found to be associated with the sound, meaning and grammar of language, with large variation between individuals.

An important recent finding is that a white matter tract, the frontal aslant tract, is associated with speech initiation and so-called spontaneous speech. Spontaneous speech is the most natural form of daily speech, in which all separate linguistic levels, e.g. sound, meaning and grammar, come together (see also Satoer et al. 2021) [3].

Where are we going?

TOWARDS A COMPUTATIONAL LANGUAGE MODEL

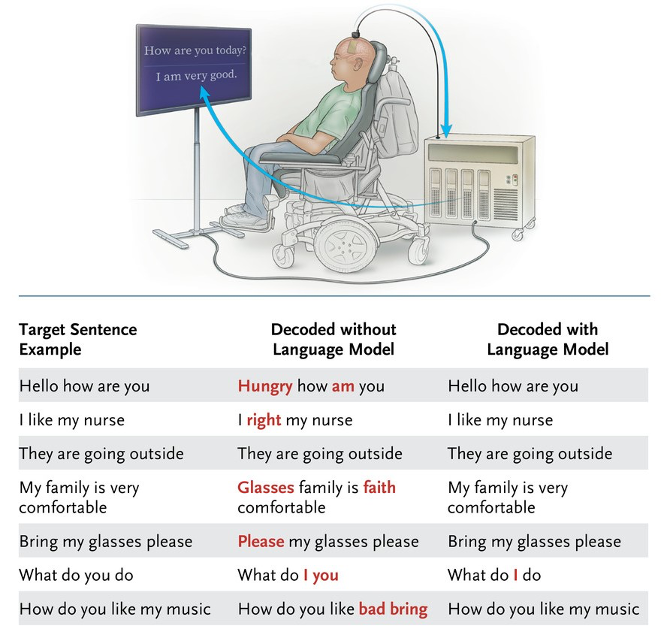

A revolutionary invention from a speech lab at the University of California, San Francisco (UCSF) was presented last year [4]: a paralyzed person was able to communicate again via a computational language model. The researchers implanted a neuroprosthesis in the brain of the patient, consisting of an electrode that is able to detect the brain signals responsible for muscle activation of the articulators. The patient was trained extensively in multiple sessions with a fixed set of words and was asked to attempt to produce these words while his brain activity was monitored via the prosthesis. Based on these brain wave patterns, a computational language model could be developed that included the fixed set of trained words. By connecting the prosthesis to a software system in which the computational model was installed, the most probable word candidates the person was attempting to say could be visualized on a screen (see Figure).
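To make the idea of "most probable word candidates" concrete, here is a minimal sketch in Python. It is not the implementation used in [4]; the vocabulary, the probabilities and the function name `rank_candidates` are all invented for illustration. It assumes that a classifier has already turned the recorded brain activity into a probability for each word of the fixed set, and it combines those probabilities with a simple language model that knows which word tends to follow which.

```python
from typing import Dict, List, Tuple

# Hypothetical fixed vocabulary (the real study used a larger trained word set).
VOCAB: List[str] = ["I", "am", "thirsty", "good"]

# Hypothetical language model: probability of a word given the previous word.
BIGRAM: Dict[str, Dict[str, float]] = {
    "<start>": {"I": 0.70, "am": 0.10, "thirsty": 0.10, "good": 0.10},
    "I": {"I": 0.05, "am": 0.80, "thirsty": 0.10, "good": 0.05},
    "am": {"I": 0.05, "am": 0.05, "thirsty": 0.45, "good": 0.45},
}


def rank_candidates(
    signal_probs: Dict[str, float], previous_word: str
) -> List[Tuple[str, float]]:
    """Rank vocabulary words by combining brain-signal and context evidence.

    signal_probs: per-word probabilities from a (hypothetical) classifier
    that looked at the brain activity during one attempted word.
    previous_word: the word decoded just before, or "<start>".
    """
    context = BIGRAM.get(previous_word, {})
    uniform = 1.0 / len(VOCAB)  # fallback when the context is unknown
    # Score = evidence from the signal times plausibility in context.
    scores = {
        word: signal_probs.get(word, 0.0) * context.get(word, uniform)
        for word in VOCAB
    }
    total = sum(scores.values())
    if total == 0.0:
        raise ValueError("no candidate word has a non-zero score")
    # Normalize so the candidate probabilities sum to 1, then sort.
    ranked = [(word, score / total) for word, score in scores.items()]
    ranked.sort(key=lambda pair: pair[1], reverse=True)
    return ranked


if __name__ == "__main__":
    # Invented classifier output for one attempted word.
    attempt = {"I": 0.10, "am": 0.10, "thirsty": 0.35, "good": 0.45}
    for word, prob in rank_candidates(attempt, previous_word="am"):
        print(f"{word}: {prob:.2f}")
```

The design choice worth noting is that the same brain signal can lead to a different top candidate depending on the preceding word, which is how a language model can help to correct an uncertain reading of the signal.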

In conclusion, in order to better understand what language is and where it is located in the brain, and to treat patients with aphasia, clinicians and researchers from multiple fields, e.g. Neurosurgery, Neuroscience, Linguistics and Psychology, must continue to unite!

Want to read more? See also the following Nature Human Behaviour commentary.

Biography

Djaina Satoer (PhD) is a Clinical Linguist and Assistant Professor at the department of Neurosurgery at the Erasmus MC University Medical Center in Rotterdam, The Netherlands. As a clinical linguist, her expertise concerns language monitoring before, during and after awake brain tumor surgery. She studied French Language and Culture at Utrecht University and received her master's degree in 2007. In 2009, she obtained her research Master of Arts in Linguistics at Utrecht University. She then gained clinical experience at the department of Neurosurgery at the Erasmus MC between 2009 and 2011. She studied the effects of awake brain surgery on language and received her PhD degree in 2014. In 2014 she joined the Neurolinguistics group at the University of Groningen as an assistant professor and coordinator of the Groninger Center of Expertise for Language and Communication Disorders, alongside her clinical and post-doc position in Rotterdam. Since 2018 she has held a full-time position as clinical linguist and assistant professor in Rotterdam, where she leads the Clinical Linguistics group. With this team and other co-workers she investigates perioperative language functioning in brain tumor patients, which can be used directly for patient counseling, and she also develops aphasia tests for neurological populations.

References

[1] De Saussure, F. et al. (1916). Cours de linguistique générale. Paris: Payot.

[2] Chomsky, N. (1957). Syntactic Structures. Mouton & Co.

[3] Satoer, D. et al. (2021). Spontaneous Speech. In: Mandonnet, E., Herbet, G. (eds) Intraoperative Mapping of Cognitive Networks. Springer, Cham.

[4] Moses, D.A. et al. (2021). Neuroprosthesis for Decoding Speech in a Paralyzed Person with Anarthria. N Engl J Med, 385(3), 217-227.